Can artificial intelligence (AI) compose an original music score based on gestures? Can machine learning be used for music generation or digital music instrumentation? Can computers predict and choose appropriate music for a person's mental state? As part of its AI and Music initiative, the Institute for AI-Driven Discovery and Innovation has awarded seed grants to three multidisciplinary research teams to explore these possibilities.

An anonymous $50,000 donation to the AI Institute, to support activities at the convergence of AI and music, has made these seed grants possible. These grants will support projects over the course of the 2020-21 academic year.

"Both music and mathematics revolve around the generation and analysis of patterns," said AI Institute Director Steven Skiena. "AI and machine learning provide exciting new tools for working with music. The three exciting projects seeded by this generous donation bring together faculty from the computational sciences and humanities. I really like the sound of that."

The seed grants will fund the following projects:

Topic: Deep Representations of Music for Personalized Prediction of Mood and Mental Health

Team: H. Andrew Schwartz, Department of Computer Science; David M. Greenberg, Bar-Ilan Departments of Social Sciences and Music, and Cambridge University Department of Psychiatry; and Matthew Barnson, Department of Music

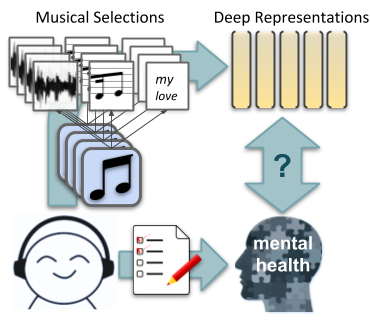

How does one effectively store music data such that machine learning can best make predictions from it? Musical scores can be digitized; audio files themselves have a pattern, and lyrics also contain useful information. One thing these all have in common is that they are sequences. This project, in part, seeks to leverage recent advances in natural language processing using deep learning to create "representations" of sequential music data.


A long-term goal of this research, according to Professor Schwartz, is to use AI and music to positively influence the mental health of individuals. This would be done either by having AI compose the music itself or by having it choose the right piece of music from among a selection that the person likes. Representations of each piece of music will be compared to the mood and mental health of individuals.

People have used music to reflect their mood for centuries. This team seeks to fully understand the connection between music and psychological state, which will require a strong representation of the music itself. Recent advances in processing language, a form of data with much in common with music (both are sequential and used for communication), can be adapted to provide a powerful quantitative window into how music links to mood, and how that link differs from one person to another.
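
To illustrate what treating music as sequence data can look like in practice, here is a minimal Python sketch. It is not the team's method: the melody, the token format and the helper names (`to_tokens`, `build_vocab`, `encode`, `bigram_counts`) are all assumptions made up for this example. It only shows the first step borrowed from language processing, turning notes into integer ids the way words are turned into vocabulary indices; a deep learning model would then learn vector representations over such ids.

```python
from collections import Counter

# Hypothetical melody as (MIDI pitch, duration in beats) pairs, invented for illustration.
melody = [
    (60, 1.0), (62, 1.0), (64, 1.0), (60, 1.0),
    (60, 1.0), (62, 1.0), (64, 1.0), (60, 1.0),
    (64, 1.0), (65, 1.0), (67, 2.0),
]


def to_tokens(notes):
    """Turn each note into a string token, much as text is split into words."""
    return [f"p{pitch}_d{duration}" for pitch, duration in notes]


def build_vocab(tokens):
    """Assign each distinct token an integer id in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens, vocab):
    """Replace every token with its integer id."""
    return [vocab[tok] for tok in tokens]


def bigram_counts(ids):
    """Count adjacent id pairs, a very simple sequential feature."""
    return Counter(zip(ids, ids[1:]))


tokens = to_tokens(melody)
vocab = build_vocab(tokens)
ids = encode(tokens, vocab)
bigrams = bigram_counts(ids)

print(ids)
print(bigrams.most_common(3))
```

The same pipeline would apply, under the same assumptions, to lyrics or to symbols extracted from a score, since each is also a sequence of discrete tokens.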

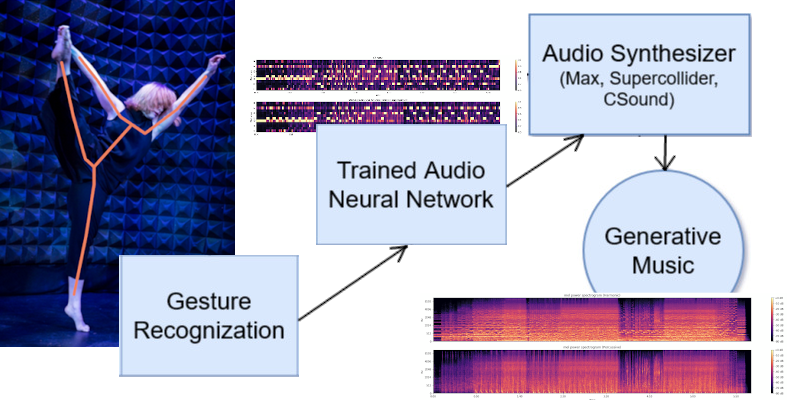

Topic: Creating Music Through Space - Spatial Gesture-Following Deep Learning Network for Long-Term Generative Composition

Team: Margaret Schedel, Department of Music; Dimitris Samaras, Department of Computer Science; Omkar Bhatt (M.S.), Department of Computer Science; Niloufar Nourbakhsh (Ph.D.), Department of Music; Robert Cosgrove (D.M.A.), Department of Music

The brainchild of three Stony Brook graduate students (supervised by Professors Schedel and Samaras), this proposal seeks to answer three questions: What would it sound like if you could compose an entire score by moving throughout a 3D space? What would it sound like if the musical material was different every time? What would it sound like if a computer could predict, not just react to, your gestures and interact with you the same way you interact with it? The team will address these questions by synthesizing state-of-the-art AI research in neural networks, music information retrieval and computer vision, with the goal of taking key steps toward the development of a collaborative and generative musical system that can co-compose in real time with a performer.

The first proposed application of this system will be in collaboration with developer Omkar Bhatt, composers Rob Cosgrove and Niloufar Nourbakhsh, and dancer/choreographer Marie Zvosec for a presentation sometime during the Fall 2020 semester.

Topic: Audified Cellular Automata as Musical AI

Team: Richard McKenna, Department of Computer Science and students

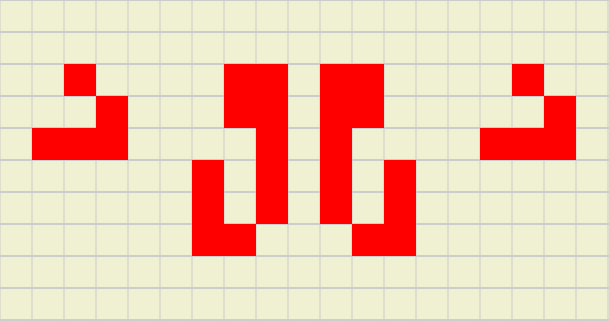

Professor McKenna's project intends to explore the audification of cellular automata as a music-generating tool and to discover interesting new automata that serve music generation or digital music instrumentation. Inspired by the cellular automata found in English mathematician John Conway's "Game of Life" (the figure shows common patterns found in Conway's Game of Life), McKenna plans to start with a standard Conway automaton algorithm and then explore the many variations and ways those variations could be employed to generate sound for making instruments and then music.
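
As a rough illustration of the idea, the Python sketch below runs the standard Game of Life rules and turns each generation into a set of pitches. The rules in `step` are Conway's; everything else is an assumption for this example and not taken from McKenna's project: the function `cells_to_pitches`, the choice of a pentatonic scale, and the rule that a live cell's column selects the pitch.

```python
from collections import Counter


def step(live):
    """Advance Conway's Game of Life by one generation.

    `live` is a set of (row, col) cells on an unbounded grid.
    """
    # Count, for every cell, how many live neighbours it has.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# Semitone offsets of a major pentatonic scale (a hypothetical choice of scale).
PENTATONIC = [0, 2, 4, 7, 9]


def cells_to_pitches(live, base=60):
    """Map each live cell's column to a MIDI pitch (assumed mapping, for illustration)."""
    pitches = set()
    for _, col in live:
        octave, degree = divmod(col, len(PENTATONIC))
        pitches.add(base + 12 * octave + PENTATONIC[degree])
    return sorted(pitches)


# The "blinker", a well-known pattern that repeats every two generations.
blinker = {(1, 0), (1, 1), (1, 2)}

chords = []
state = blinker
for _ in range(4):
    chords.append(cells_to_pitches(state))
    state = step(state)

print(chords)
```

With this mapping, a pattern that repeats visually also repeats audibly: the blinker alternates between a three-note cluster and a single note. Sending the pitches to a synthesizer or MIDI device is left out of the sketch.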


"There are so many interesting patterns that these automata generate that present recognizable visual patterns and so I'm hoping to use that fact to generate recognizable audio patterns as well," said McKenna.

A major goal of the AI and Music project is to increase collaboration between arts and science/engineering faculty. An AI and Music seminar series was instituted in the Spring of 2020, and attracted a mix of students and faculty across the disciplines. The seminar and these seeded projects will create an environment for further collaboration. A popular new undergraduate Digital Intelligence course brings Arts and Sciences students together with computer science students, leading to exciting collaborative projects.