The Computer Vision group in Stony Brook University’s AI Institute has cracked the national Top Ten in Computer Vision, according to CSRankings. CSRankings is “a metrics-based ranking of top computer science institutions around the world,” which seeks to recognize faculty and institutions who are fervently committed to computer science research. Other universities in the top ten of Computer Vision include Carnegie Mellon University, MIT, Stanford and UC Berkeley. CSRankings ranks institutions in more than two dozen areas of Computer Science. Stony Brook University’s Department of Computer Science, located in Stony Brook’s College of Engineering and Applied Sciences, is currently ranked 30th overall.

"This Top Ten ranking in Computer Vision is a tribute to the AI Institute's successful hiring through the SUNY Empire Innovation Program,” said Steven Skiena, Director of the AI Institute. “We took a good program to one of the best in the country--and now we are in position to make it even better!"

The Computer Vision group faculty have been recognized with a number of best paper awards (from the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), the ACM Symposium on User Interface Software and Technology (UIST), the International Conference on Robotics and Automation (ICRA), the Institute of Industrial & Systems Engineers (IISE) Data Analytics and Information Systems and others) and funding grants (from the National Science Foundation, Yahoo Research, Amazon, National Geographic, the National Cancer Institute, the National Institutes of Health and others). Most recently, Haibin Ling won the 2019 Yahoo Research Faculty and Research Engagement Program Award.

The Department of Computer Science’s Computer Vision Lab was founded in 2001 by Professor Dimitris Samaras. The group’s faculty includes: Samaras (Director), Minh Hoai Nguyen, Allen Tannenbaum, Romeil Sandhu, David Xianfeng Gu, Haibin Ling, Michael Ryoo and Zhaozheng Yin. Ling, Ryoo and Yin recently joined the department as SUNY Empire Innovation Program professors. Prior to the opening of the Computer Vision Lab, Distinguished Professor Emeritus Theo Pavlidis led research on pattern recognition.

As Director, Samaras chooses projects deeply rooted in practical problems to inform his group's theoretical advances. “As Computer Vision matures into a major component of the Artificial Intelligence technological revolution, the ability to understand and model problems from diverse domains will be paramount,” he said. Quite often, his theoretical and technological advances have come from multi-disciplinary endeavors. For example, an important application area has been Medical Image Analysis, where novel Machine Learning methods have been developed for the analysis of functional brain images and Digital Histopathology.

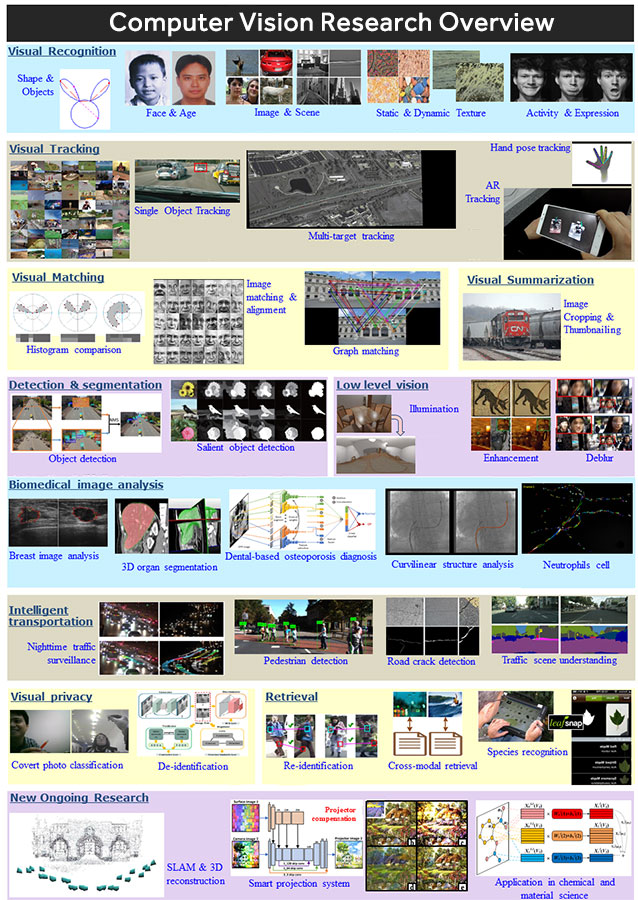

The group’s research encompasses a wide range of topics. Current work includes:

Samaras - Explaining visual data for Computer Vision, Computer Graphics and Medical Image Analysis through the appropriate physical and statistical models. Application areas have included Face and Gesture Analysis, Human Activity Understanding, and Shadow and Texture Classification.

Ling - Video Object Tracking, Image and Object Recognition, Visual Matching, Object Segmentation and Detection, Computer Graphics, Image Processing, Robotics, Human Computer Interaction and Augmented Reality. Federal- and industry-funded research includes Smart and Connected Health, Security and Privacy, Biodiversity Study and Surveillance.

Nguyen - Developing algorithms for analyzing human behavior (the actions or activities of a person or a group of people recorded on video), the interaction between hands and other objects, and facial or head movements (to quantify a person’s emotional state), as well as discovering the parts of an image or a video that attract visual attention and predicting and manipulating visual attention. His analysis works on both recognition and prediction tasks.

Ryoo - Intelligent Robotics focusing on robot perception and robot action learning, enabling robots to recognize their surrounding environment as well as the events and activities of interacting humans, and to imitate human actions by learning them from visual examples. He develops machine learning approaches that bridge robots' visual input data to motor control (i.e., visuo-motor control policy learning). In collaboration with researchers at Google Brain, he has been working on representation learning for video data, discovering neural architectures with optimal connectivity for video understanding.

Yin - Working on Computer Vision and Machine Learning with a wide range of applications in Biomedical Image Analysis, Cyber Physical Systems, Human Robot Collaboration, Social Media Analysis, Security, and Surveillance.

For more information about the Computer Vision Lab at Stony Brook University, please visit its website.