Stony Brook, NY, October 29, 2025 — Artificial intelligence may soon help scientists see Mars more clearly than ever before. At Stony Brook University, researchers have developed a system that can generate realistic, three-dimensional videos of the Martian surface — a tool that could reshape how space agencies simulate exploration and prepare for future missions.

Chenyu You, assistant professor in the Department of Applied Mathematics & Statistics and the Department of Computer Science at Stony Brook University, worked on the project, Martian World Models, which tackles a problem that has long limited planetary research. Most AI models are trained on imagery from Earth, which limits their ability to interpret the distinct lighting, textures, and geometry of Mars. “Mars data is messy,” said You. “The lighting is harsh, textures repeat, and rover images are often noisy. We had to clean and align every frame to make sure the geometry was accurate.”

To overcome these limitations, the team built a specialized data engine, called M3arsSynth, that reconstructs physically accurate 3D models of Martian terrain using NASA rover images. The system processes pairs of photographs taken by the rovers to calculate precise depth and scale, building detailed digital landscapes that reflect the planet’s actual structure. These reconstructions then serve as the foundation for MarsGen, an AI model trained to generate new, controllable videos of Mars from single frames, text prompts, or camera paths.
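For readers curious how a pair of photographs can yield depth at true scale, the textbook stereo relation gives a rough intuition. The sketch below is not the M3arsSynth pipeline, whose internals this article does not describe. It assumes an idealized, rectified two-camera setup, and the function name and all numeric values are hypothetical, chosen only for illustration.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Return distance in meters to a point seen by both cameras.

    Uses the standard pinhole stereo relation Z = f * B / d, where
    f is the focal length in pixels, B is the distance between the two
    cameras in meters, and d is the horizontal shift (disparity) of the
    point between the two images, in pixels.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only, not real rover camera parameters.
rock_distance_m = depth_from_disparity(
    disparity_px=20.0, focal_length_px=1000.0, baseline_m=0.4
)
print(f"Estimated distance: {rock_distance_m:.1f} m")
```

Because the camera separation is a known physical length, the resulting depth comes out in real units, which is why paired images can fix scale as well as shape. Nearby objects shift more between the two views than distant ones, so larger disparities map to shorter distances.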

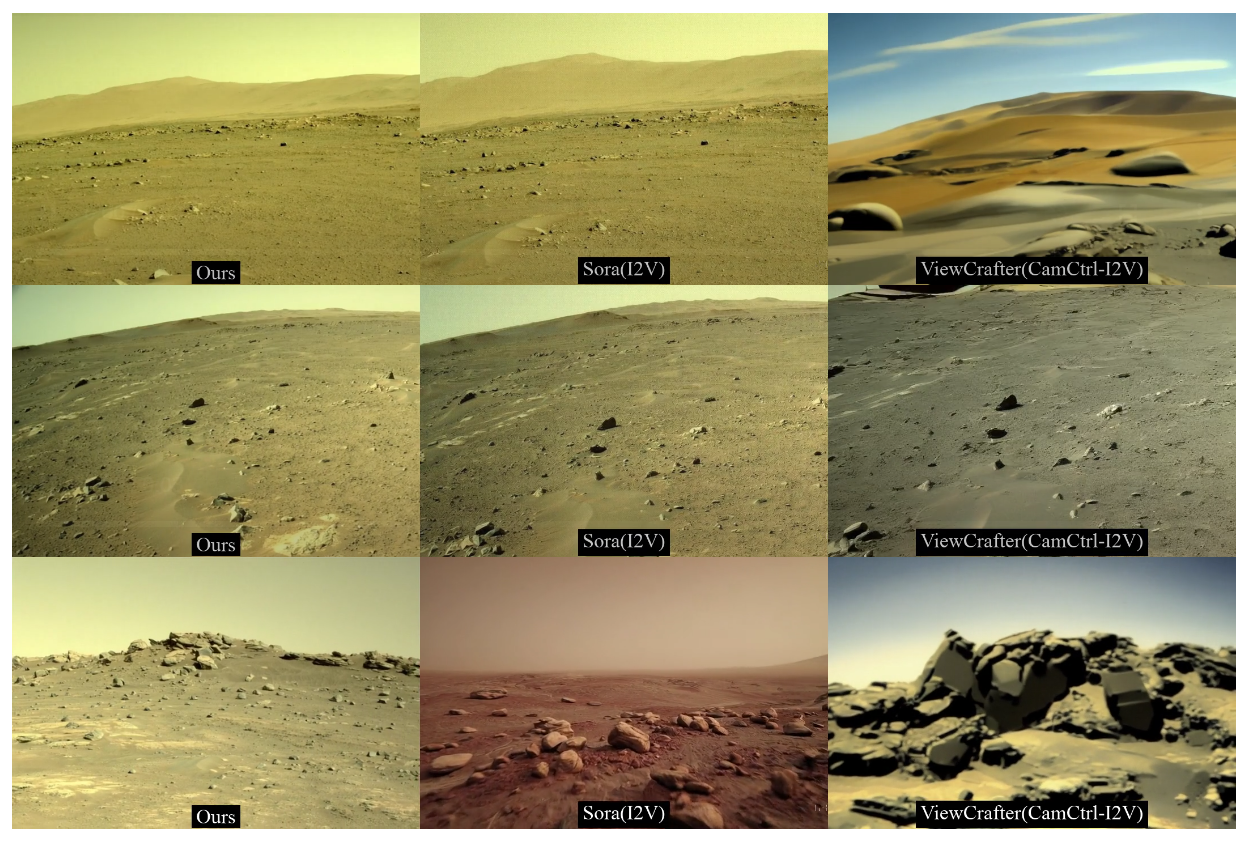

The result is a system that produces smooth, consistent video sequences that capture not only the appearance of Martian landscapes but also their depth and physical realism. “We’re not just making something that looks like Mars,” You said. “We’re recreating a living Martian world on Earth — an environment that thinks, breathes, and behaves like the real thing.”

Achieving that level of fidelity required an unusual degree of human oversight. The team manually cleaned and verified each dataset, removing blurred or redundant frames and cross-checking geometry with planetary scientists. “Preparing a large-scale Mars dataset is a very challenging problem,” You said, “but once the data are right, the model can produce stable, convincing videos.”

Early tests show that the system generates clearer, more consistent visuals than commercial AI video tools trained on Earth data. It also preserves the subtle interplay of light and shadow that defines Martian landscapes, something most AI models ignore. The researchers evaluated image quality through standard visual metrics and expert validation, confirming their model’s reliability and realism.

Still, You sees this as a starting point. His team is already exploring how to model environmental dynamics — the movement of dust, the variation of light — and how to improve the system’s understanding of terrain features. “We want systems that sense and evolve with the environment, not just render it,” You said.

The work could significantly advance how space missions are planned and rehearsed, allowing scientists to test navigation systems and analyze terrain before a rover ever lands. By bridging the gap between visual fidelity and physical accuracy, Martian World Models points toward a future in which planetary exploration begins long before launch.