Stony Brook researchers unveil FiG-Priv, a privacy protection framework that safeguards sensitive information while helping maintain the utility of visual assistants.

Stony Brook, NY, October 1, 2025 - For blind and low-vision (BLV) individuals, visual assistant systems like BeMyAI and SeeingAI provide instant answers to visual questions, like “Can you tell me what this form says?” or “How much is my bill?” These applications advise users against capturing personally identifiable information, but blind users cannot always avoid unintentionally including private objects in the images they submit with their questions. Some users also need to ask about personal items directly, which raises further privacy concerns.

A new study led by Stony Brook University, in collaboration with the University of Texas at Austin and the University of Maryland, introduces FiG-Priv, a framework that selectively conceals only high-risk personal information. Details such as account numbers or Social Security digits are hidden, while safe context, like a form’s type or a customer service number, remains visible.

Paola Cascante-Bonilla, co-author of the study and assistant professor of Computer Science at Stony Brook University, explained how it works: “Traditional masking techniques to protect sensitive information often blur or black out entire objects. For blind and low-vision users, this is impractical. Masking too much destroys the utility of the content, while masking too little leaks sensitive data. FiG-Priv aims to allow BLV users to interact with AI systems without exposing personal information. It focuses only on the sensitive content.”

First, the system detects and segments private objects within an image, such as a credit card or financial statement. It then applies orientation correction, ensuring that text is aligned for reliable recognition. Specialized agents perform optical character recognition (OCR) and text detection, identifying every readable element.

Each text span is then scored using a risk model built on more than 6,000 real-world identity theft cases. The model maps how exposing one detail (like an address) can lead to further leaks (such as stolen mail leading to bank fraud). Using this model, FiG-Priv assigns a risk score to each piece of information and masks only those with the highest likelihood of harm.
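To make the scoring idea concrete, here is a minimal sketch of how a chain of leaks could be turned into a per-span risk score. It is an illustration only, not the FiG-Priv risk model: the graph `LEAK_GRAPH`, its probabilities, the 0 to 100 scale, the threshold of 50, and the function names `risk_score` and `spans_to_mask` are all hypothetical.

```python
from collections import deque

# Hypothetical leak graph (not from the study): an edge (target, p) under a key
# means that exposing the key attribute leads to the target with assumed
# probability p.
LEAK_GRAPH = {
    "address": [("stolen_mail", 0.5)],
    "stolen_mail": [("bank_fraud", 0.4)],
    "account_number": [("bank_fraud", 0.9)],
    "customer_service_number": [],
}


def risk_score(attribute, graph=LEAK_GRAPH, harm="bank_fraud"):
    """Score 0-100 based on the most likely chain of leaks from attribute to harm."""
    best = {attribute: 1.0}  # best known probability of reaching each node
    queue = deque([attribute])
    while queue:
        node = queue.popleft()
        for target, p in graph.get(node, []):
            candidate = best[node] * p
            if candidate > best.get(target, 0.0):
                best[target] = candidate
                queue.append(target)
    return round(100.0 * best.get(harm, 0.0), 2)


def spans_to_mask(spans, threshold=50.0):
    """Return the text of spans whose attribute type scores at or above threshold."""
    return [text for text, attribute in spans if risk_score(attribute) >= threshold]


# Each OCR span is paired with an assumed attribute type.
spans = [
    ("Acct 1234 5678", "account_number"),
    ("12 Elm Street", "address"),
    ("1-800-555-0100", "customer_service_number"),
]
print(spans_to_mask(spans))  # ['Acct 1234 5678']
```

With these made-up numbers, the account number scores 90 and is masked, the address scores 20 (0.5 × 0.4) and stays visible, and the customer service number scores 0. A different threshold or graph would change which spans are hidden.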

The final result is a redacted image in which risky content is clearly obscured with black squares, while the rest of the scene remains intact and interpretable by the visual assistant. Results show that FiG-Priv preserves 26% more image content than full-object masking. This allows visual assistants to provide 11% more useful responses and achieve up to 45% higher recognition accuracy for private objects.
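The difference between fine-grained and full-object masking can be illustrated with a small sketch that fills rectangular regions of a toy image and measures how many pixels remain untouched. This is an assumption-laden example, not the study's evaluation: the image is a plain list of pixel rows, the box format `(left, top, right, bottom)` and the helper names are hypothetical, and the pixel-based measure here is not necessarily how the reported 26% figure was computed.

```python
def masked_pixels(width, height, boxes):
    """Set of (x, y) pixels covered by any (left, top, right, bottom) box."""
    covered = set()
    for left, top, right, bottom in boxes:
        for y in range(max(top, 0), min(bottom, height)):
            for x in range(max(left, 0), min(right, width)):
                covered.add((x, y))
    return covered


def apply_mask(image, boxes, fill=0):
    """Return a copy of image (a list of pixel rows) with covered pixels set to fill."""
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]
    for x, y in masked_pixels(width, height, boxes):
        out[y][x] = fill
    return out


def preserved_fraction(width, height, boxes):
    """Fraction of pixels left unmasked."""
    return 1.0 - len(masked_pixels(width, height, boxes)) / (width * height)


# Toy 6x4 grayscale image; 255 is white, 0 is the black fill.
image = [[255] * 6 for _ in range(4)]
fine_grained = [(1, 1, 4, 2)]  # one risky text span
full_object = [(0, 0, 6, 4)]   # the whole detected object

redacted = apply_mask(image, fine_grained)
print(preserved_fraction(6, 4, fine_grained))  # 0.875
print(preserved_fraction(6, 4, full_object))   # 0.0
```

In this toy case, masking only the risky span keeps 21 of 24 pixels, while masking the whole object keeps none.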

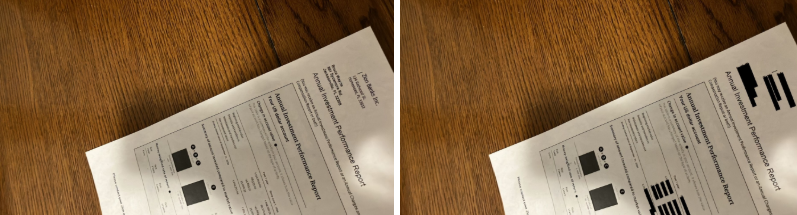

Left: The image shows an Annual Performance Report with the bank's name, the client's address, and bank details including deposits, withdrawals, etc. Risk Score: 100.0

Right: The image shows an Annual Performance Report on a wooden surface. It includes data such as a summary of the amount invested, but specific numbers and names have been removed for privacy. Risk Score: 0.

PhD student Jeffri Murrugarra-Llerena, the lead author, said, “Blind and low-vision users should be able to support both their independence and their privacy. In previous approaches, they were forced to choose one over the other. With our approach, users can ask questions more confidently, without worrying about what these systems might reveal.”

While the current model emphasizes financial risks, the research team is expanding FiG-Priv to address emotional and psychological concerns, such as sensitive medical or personal information that may cause distress if exposed. They also plan to incorporate direct feedback from BLV users and introduce adjustable privacy settings so users can decide how much information to reveal or conceal based on their own preferences.

Cascante-Bonilla added, “This project marks my first work as a principal investigator at Stony Brook, and it’s been made possible through close collaboration with colleagues from other institutions. We designed FiG-Priv to take a deliberate, fine-grained approach, rather than leaving the task to general-purpose language models. The result is meaningful work that highlights the value of collaboration.”