SBU researchers train robots to detect hidden hazards around corners using single-photon LiDAR. Their project is the first to implement this navigation technology using commercially available, lightweight sensors.

Stony Brook, NY, September 24, 2025 — Stony Brook researchers have developed the first navigation system that lets robots “see around corners” with commercially available, lightweight sensors. Their method, recently presented at ICRA 2025, is paving the way for safer robots, self-driving cars, and delivery systems operating in cluttered and unpredictable environments.

The system, built on single-photon LiDAR technology, could transform the safety of autonomous robots.

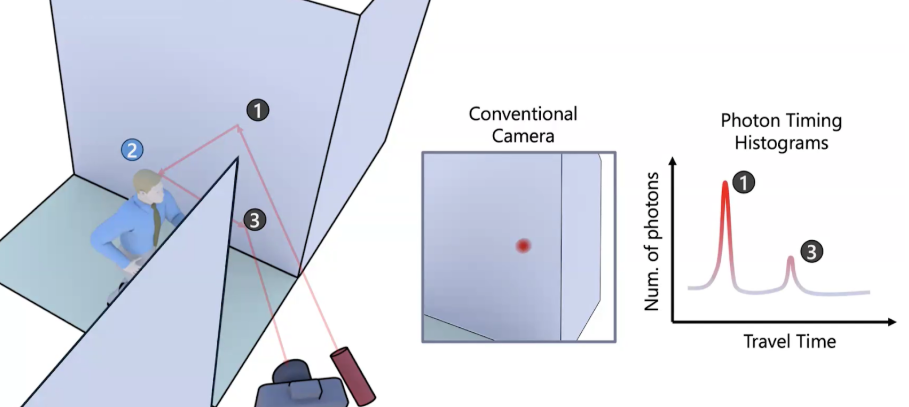

The idea was inspired by something familiar: the convex mirrors mounted at blind intersections. Those mirrors let drivers glimpse what’s otherwise invisible. “We asked ourselves, what if a robot could use walls the same way — by turning walls into mirrors?” said Akshat Dave, assistant professor of Computer Science at Stony Brook and former Postdoctoral Associate at MIT Media Lab, where he started the project. The team used single-photon LiDAR, a highly sensitive device that detects even the faintest traces of light after it bounces around corners.

Capturing those traces is only the first step. The robot also needs to understand them. For that, the team built a system that learns from the laser’s noisy reflections and creates a map of the hidden space. With that map, the robot can chart a safe path rather than waiting to react to surprises. “It’s like giving the robot a split-second of foresight,” said Dave, who continues to work on the project at Stony Brook.
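As a rough illustration of why a map of the hidden space helps, the sketch below plans a route through an L-shaped corridor twice: once with only the visible walls, and once with a predicted hidden obstacle added to the map. It is a minimal sketch under assumptions, not the team’s method: the grid layout, the breadth-first planner, and the names `shortest_path`, `fuse_maps`, and `predicted_hidden` are all hypothetical, and the hidden cell is hard-coded where the real system would infer it from LiDAR reflections.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid (1 = blocked, 0 = free)."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start to recover the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None  # goal unreachable

def fuse_maps(visible, hidden_cells):
    """Copy the visible map and mark the predicted hidden cells as blocked."""
    fused = [row[:] for row in visible]
    for r, c in hidden_cells:
        fused[r][c] = 1
    return fused

# Toy L-shaped corridor (assumption): a one-cell-wide leg running down
# column 0, then a two-cell-wide leg running right along rows 3 and 4.
visible_map = [
    [0, 1, 1, 1, 1, 1],
    [0, 1, 1, 1, 1, 1],
    [0, 1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
start, goal = (0, 0), (3, 5)

# Hypothetical output of the hidden-space mapping step: one occupied cell
# around the corner, out of the robot's direct line of sight.
predicted_hidden = [(3, 3)]

naive_path = shortest_path(visible_map, start, goal)
informed_path = shortest_path(fuse_maps(visible_map, predicted_hidden), start, goal)
```

In this toy layout the uninformed plan runs straight through the hidden cell, while the informed plan detours around it at the cost of two extra moves, which is the kind of early course change the hidden-space map is meant to enable.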

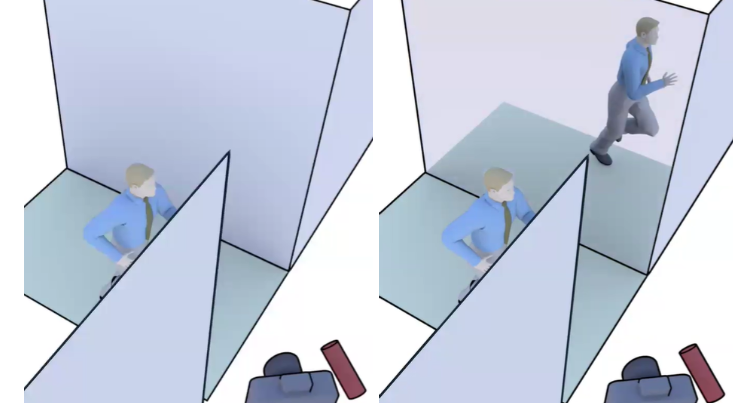

The researchers tested their system both in simulations and in the real world. In one experiment, a two-wheeled robot was sent down an L-shaped corridor where an object waited out of sight. A standard robot barreled into the obstacle before correcting course. The robot using the new system, by contrast, took a smoother path, finishing in half the time and traveling one-third less distance.

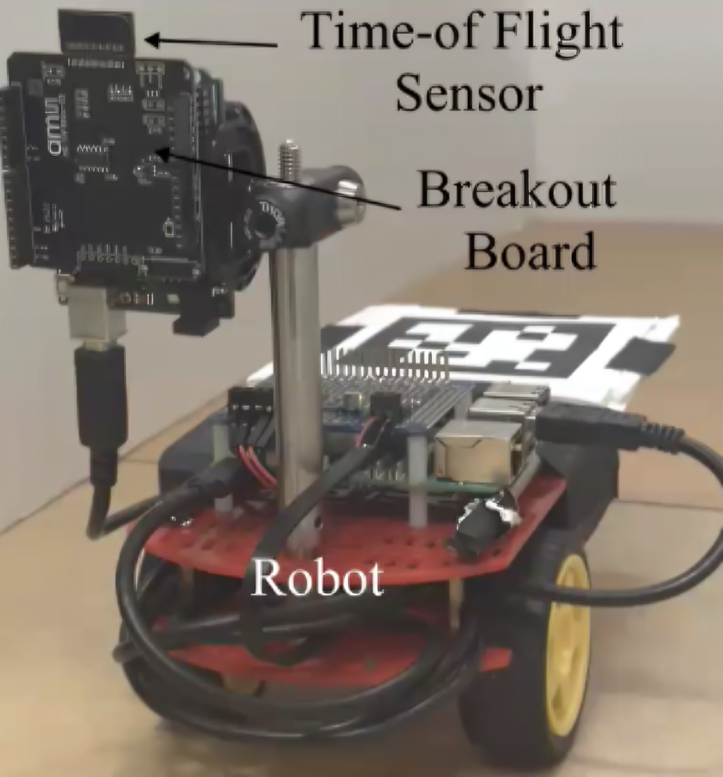

Previous non-line-of-sight (NLOS) imaging research has focused primarily on 3D reconstruction, and only with expensive, lab-grade sensors. The key advance here is that the navigation system runs on a compact, commercially available sensor instead. “Our work shows you can achieve this with commercially available hardware,” noted Dave. “That’s what makes this meaningful for real-world applications.”

There are still hurdles. Commercial single-photon LiDARs struggle with noisy signals. Moreover, the current system works best with reflective materials, and its accuracy drops in situations it has not been trained on. The team plans to push the system beyond a single hidden object, developing strategies that can handle cluttered, multi-target environments. Dave added, “The aim is to move from a structured lab setting to real-world, unstructured scenarios. We want to take this project beyond navigation, to challenges that pose real Non-Line-of-Sight problems, like teaching robots to lift hidden objects, exploring and mapping unreachable areas, and conducting search and rescue operations. These systems will be able to see the world in ways we do not.”

The project, titled “Enhancing Autonomous Navigation by Imaging Hidden Objects using Single-Photon LiDAR,” is supported by the National Science Foundation (CMMI-2153855) and the NSF Graduate Research Fellowship.