A critical next step in AI system development is applying common-sense knowledge to interpret and react to a situation as a human would. When people communicate with each other, they don’t need to explicitly say everything about a situation to decide what an appropriate action would be. Humans can understand what is implied, even when details are left out. Stony Brook researchers are leading a collaborative project to teach AI systems to make such interpretations.

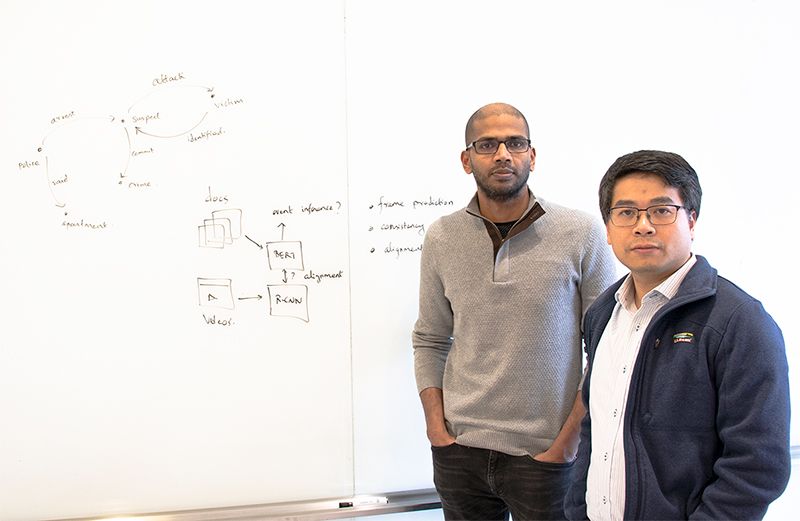

Department of Computer Science faculty members Niranjan Balasubramanian (PI) and Minh Hoai Nguyen (Co-PI), along with PhD students Heeyoung Kwon and Mahnaz Koupaee and researchers from the University of Texas at Austin, the University of Maryland, Baltimore County and the US Naval Academy, have received a $4.2 million DARPA KAIROS (Knowledge-directed Artificial Intelligence Reasoning Over Schemas) award for the project “Structured Generative Models for Multi-modal Schema Learning.”

The researchers plan to design new machine learning algorithms that combine information from news and videos to determine which events are core to a situation and which are incidental. Modeled on the human ability to understand real-world scenarios using common-sense expectations, this research aims to create AI systems that use a similar process to predict what is likely to happen and generate an appropriate response.

“If an AI system were to understand descriptions of situations and reason about them, they need to possess the kinds of common-sense knowledge about events that we humans possess. Scaling this gap is going to be key to the next set of breakthroughs in AI,” said Balasubramanian.

The cross-institution team assembled for this 54-month project includes some of the leading researchers in the field of learning event knowledge. They seek to create an AI system that can automatically learn this type of rich common-sense knowledge about events from multimodal data: text, audio transcripts and videos. The project’s predictive-tracking tools will automate this process and help analysts decide when to provide critical services to the general population.

“The confluence of natural language processing and computer vision brings in exciting new angles in the project and we are looking forward to new discoveries,” said Samir Das, Chair of the Department of Computer Science in the College of Engineering and Applied Sciences at Stony Brook.

About the Researchers

Niranjan Balasubramanian (PI) is an Assistant Professor in the Department of Computer Science at Stony Brook University and a faculty member of the AI Institute. His research is motivated by the challenge of building systems that can extract, understand, and reason with information present in natural language texts. His research interests are in two broad areas: NLP and information retrieval.

Minh Hoai Nguyen (Co-PI) is an Assistant Professor in the Department of Computer Science at Stony Brook University and a faculty member of the AI Institute. His research interests are in computer vision, machine learning and time series analysis with a focus on creating algorithms that recognize human actions, gestures and expressions in video.

Editor’s note: The research team would like to thank Kathryn Joines and Kristen Ford of the Stony Brook University Office of Sponsored Programs and the Stony Brook University Grants Management team for their invaluable help with the proposal, agreement and post-award support, and Christine Cesaria for her immense role in preparing the grant and continuing to administer it on the Computer Science side.