As higher education institutions embrace generative artificial intelligence tools, Stony Brook University’s library is spearheading efforts to help students and faculty learn to use them ethically and responsibly.

Lav Varshney, the inaugural director of the Artificial Intelligence Innovation Institute (AI3), will arrive at Stony Brook University in August from the University of Illinois Urbana-Champaign, where he has been a faculty member in the Department of Electrical and Computer Engineering. Varshney believes Stony Brook’s growth and commitment to AI are on an upward trajectory. “There are a lot of interesting initiatives,” he said, “and the new institute will hopefully bring them together.” He hopes to collaborate with colleagues across campus in medicine, the arts and sciences, engineering, business, and atmospheric sciences to develop AI-driven solutions that have a positive impact on society.

Lav Varshney appointed inaugural director of Stony Brook's AI Innovation Institute, effective August 1. Varshney most recently served on the faculty of the University of Illinois Urbana-Champaign’s Department of Electrical and Computer Engineering. His background blends work in industry, academia, government, think tanks, and national laboratories. Varshney’s leadership is aimed at building on the university’s role as a core partner in Empire AI, New York State’s $250 million investment in AI and computing. He will also support further collaborations with industry and work to identify unexplored research areas.

Stony Brook University has introduced NVwulf, a cutting-edge high-performance computing (HPC) GPU cluster designed to accelerate research in artificial intelligence (AI), machine learning (ML) and data-intensive science. On July 7, the system became available for advanced testing to the researchers who helped fund its purchase and to their students, marking a significant upgrade in campus-wide computational capabilities.

Stony Brook researchers launch AI-powered recycling project to reduce waste contamination and improve sustainability.

Stony Brook, NY, July 6, 2025 — A new research initiative funded by Stony Brook University’s AI Innovation Seed Grant is reimagining how we tackle one of the most persistent problems in recycling: contaminated waste streams. By combining video footage and cutting-edge artificial intelligence, researchers aim to automate the analysis of recycling materials, reduce contamination, and lay the groundwork for smarter, cost-effective, and sustainable waste management.

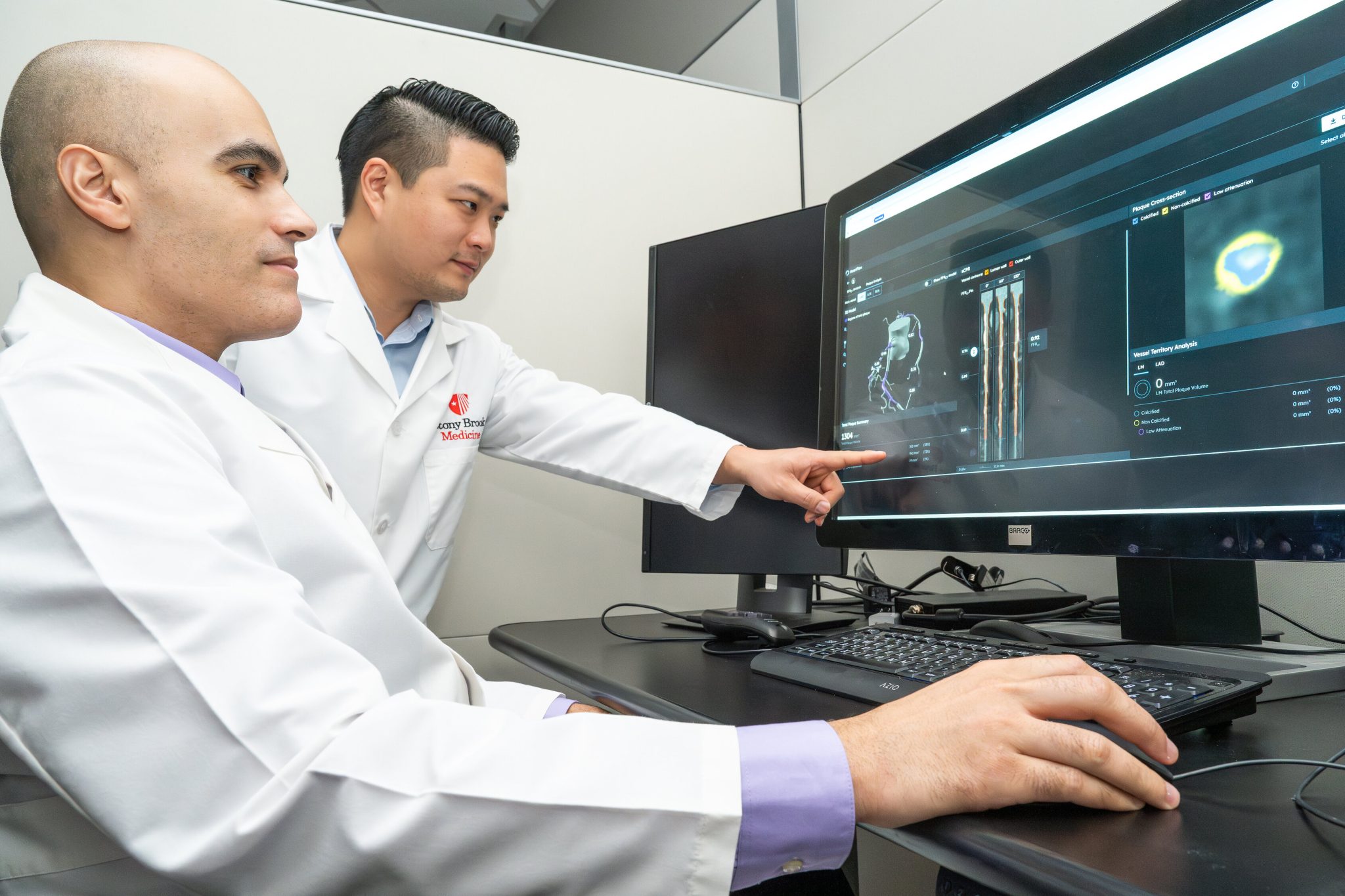

News 12 photojournalist Brian Endres shows how doctors use HeartFlow Plaque Analysis software to better understand what each patient needs.

Stony Brook Medicine is the first healthcare system on Long Island, and one of a select number nationwide, to implement an artificial intelligence (AI) technology, HeartFlow Plaque Analysis™, that enables its physicians to more accurately understand the blockages in the coronary arteries of patients with suspected heart disease. This advancement, introduced at Stony Brook through a collaboration between the Division of Cardiology and the Department of Radiology, represents a significant milestone in the fight against heart disease, which remains the leading cause of death for adults in the United States.