Critics warn that AI’s potential for bias, lack of true empathy, and limited human oversight could actually endanger users’ mental health, especially among vulnerable groups like children, teens, people with mental health conditions, and those experiencing suicidal thoughts.

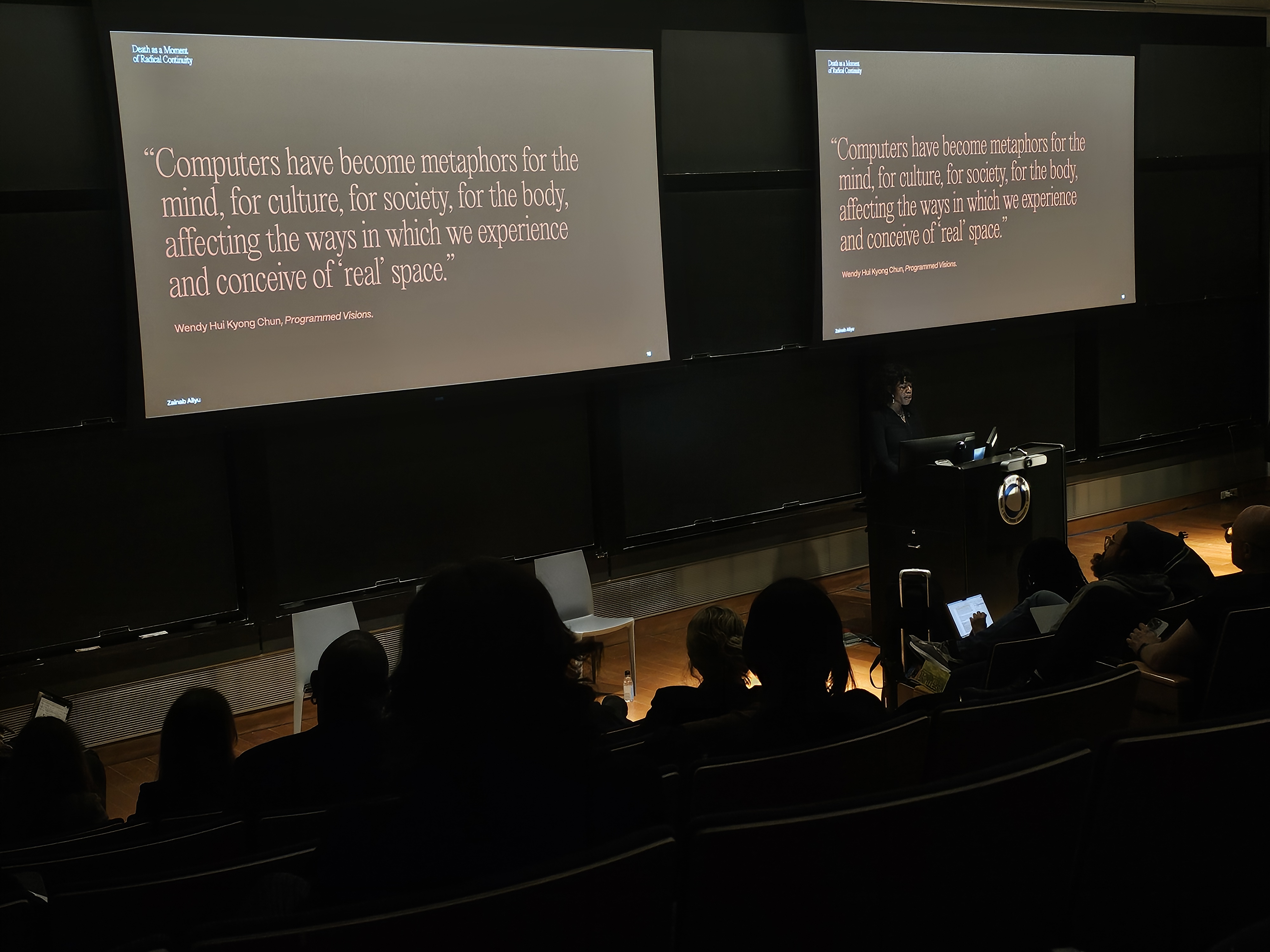

A new cross-disciplinary course launching in Spring 2026 will examine the role of AI in the contemporary world from the perspectives of multiple fields.

How is artificial intelligence (AI) reshaping scientific research? As part of the “Conversations in Graduate Education” series, the Graduate School hosted a virtual discussion on Wednesday, November 6, featuring Lisa Messeri (Yale University) and Molly Crockett (Princeton University), co-authors of the Nature article “Artificial intelligence and illusions of understanding in scientific research.”

Stony Brook’s Office for Research and Innovation (OR&I) held its inaugural Wolf Den, an evening where investors, researchers, startup founders and business leaders came together to exchange ideas, foster collaboration and strengthen connections that drive technology development and economic growth across Long Island.

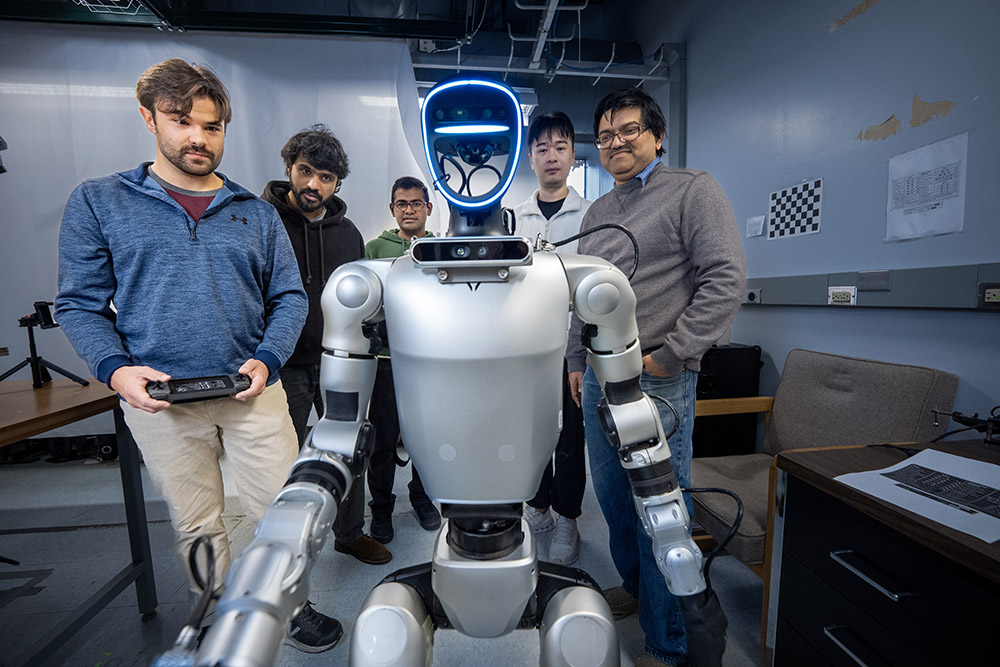

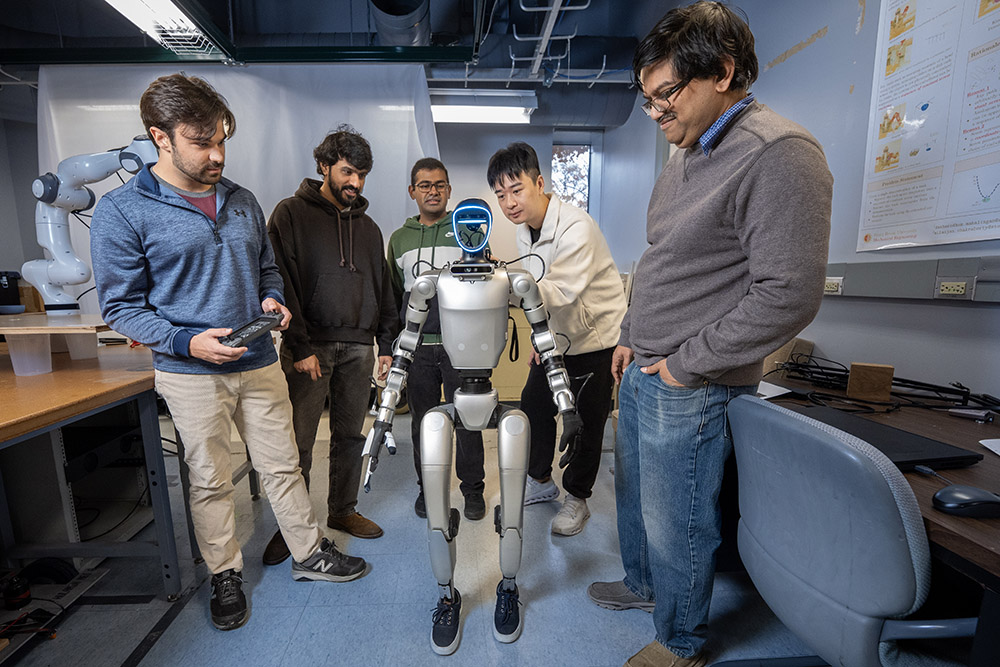

Stony Brook, NY, November 21, 2025 — At the beginning of Fall 2025, Lav R. Varshney joined Stony Brook University as Director of the AI Innovation Institute.

‘TII’ Will Advance Semiconductor Research and Workforce Development

State University of New York Chancellor John B. King Jr. today announced the launch of the SUNY – NY Creates Technology Innovation Institute (TII) to bolster future semiconductor research and workforce development.

For more than a decade, WolfieTank has celebrated innovation and bold thinking at Stony Brook University.

Modeled after the television show Shark Tank, the annual pitch competition invites students to present their entrepreneurial ideas to a panel of industry judges. Now in its 11th year, the event has grown into a showcase of creativity and collaboration across disciplines.

“It feels successful to me every year because we get to empower entrepreneurs and get them to really develop and showcase their work,” said David Ecker, director of iCREATE and the founder of WolfieTank. “We need to have a way of showcasing their entrepreneurial ideas, and this is a way.”